Balancing Knowledge Sharing and Security

In modern enterprise intelligence architecture, we increasingly face a structural conflict: the drive for collective intelligence versus the mandate for data sovereignty. Within an IT/AI Stack, "Open Information Sharing" (Superdistribution) serves as a high-velocity engine for organizational learning. However, the same mechanism introduces a catastrophic strategic vulnerability: from an information security standpoint, the fluidity that facilitates learning also undermines confidentiality.

The source material states the trade-off bluntly:

"Open Information Sharing (Superdistribution) is great for sharing knowledge with many—but for that very reason, it is catastrophic for confidentiality."

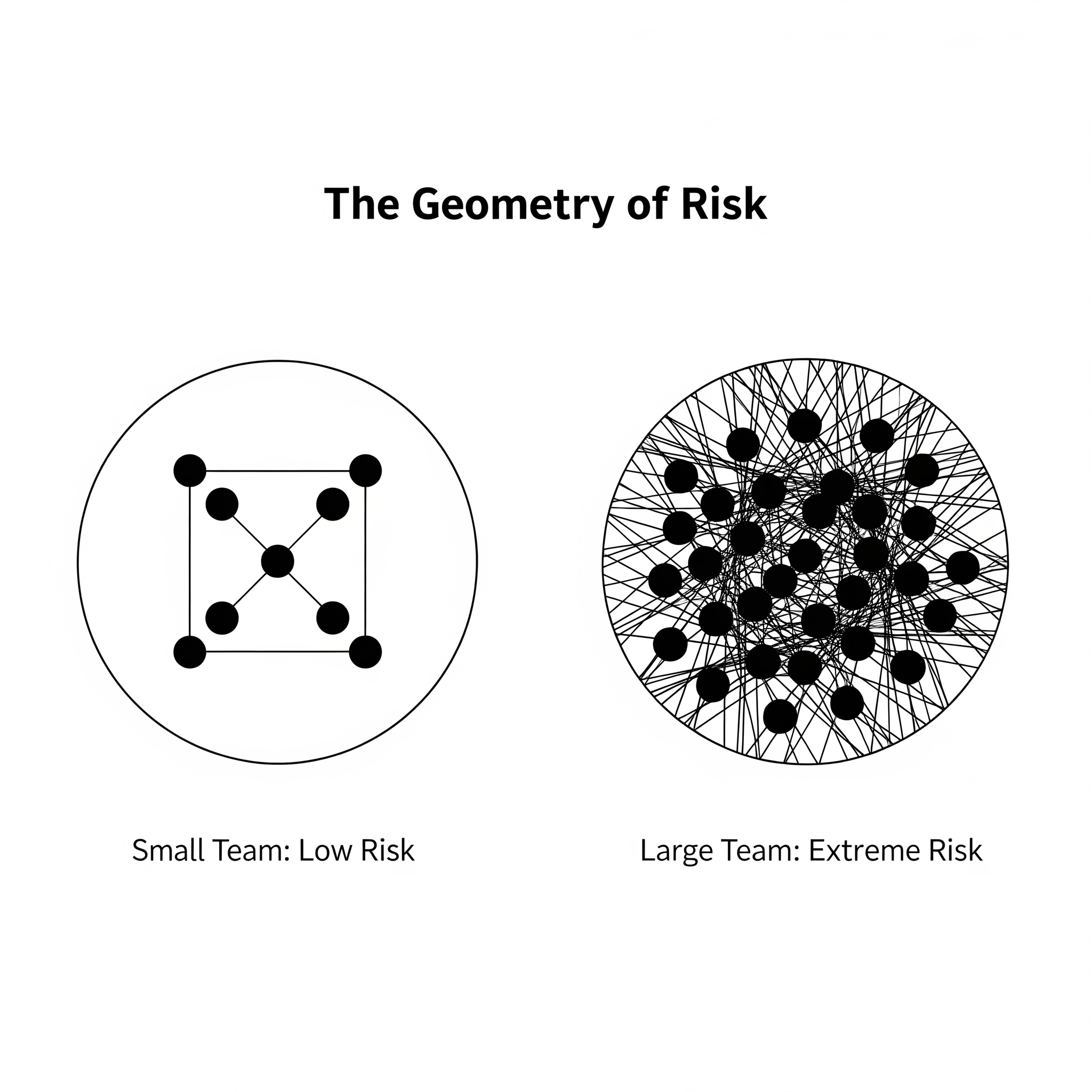

This tension is not a failure of policy, but a symptom of the underlying geometry of team structures. As we scale the human element, the risk profile shifts from manageable to uncontainable.

Maintaining confidentiality in a growing environment is not feasible without structural intervention. The relationship between the number of participants (nodes) and the potential communication paths (lines) is non-linear: a team of n people has n(n-1)/2 possible pairwise paths. As we add personnel, we are not just adding voices; we are multiplying the channels through which information can leak, across a quadratically expanding attack surface.

| Team Size (Nodes) | Communication Paths (Lines) | Complexity & Security Impact |
|---|---|---|
| 3 People | 3 Lines | Minimal: Information is easily compartmentalized. |
| 6 People | 15 Lines | Moderate: Five times as many paths as a 3-person team. |
| 12 People | 66 Lines | Severe: Attack surface exceeds manual oversight capabilities. |
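
The figures in the table follow from counting each pair of people exactly once. As a minimal sketch (the function name `communication_paths` is ours, chosen for illustration, and is not part of the source material), the calculation can be reproduced like this:

```python
def communication_paths(team_size: int) -> int:
    """Return the number of distinct pairwise paths among team_size people.

    Each person can talk to every other person, and each pair is counted
    once, which gives n * (n - 1) / 2.
    """
    if team_size < 0:
        raise ValueError("team_size must be non-negative")
    return team_size * (team_size - 1) // 2


if __name__ == "__main__":
    # Reproduce the three rows of the table above.
    for n in (3, 6, 12):
        print(f"{n} people -> {communication_paths(n)} paths")
```

Doubling the team from 6 to 12 people more than quadruples the number of paths (15 to 66), which is why oversight that works for a small team stops scaling.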

The geometry of these communication lines forces every IT and AI environment into one of two mutually exclusive states.

| State | Strategic Outcome | Architectural Impact |
|---|---|---|
| State 1: High Information Density | Optimized for Learning | High-velocity sharing creates a rich environment for collective intelligence but offers zero containment. |
| State 2: High Communication Complexity | Deficient for Confidentiality | The density of interaction paths creates too many leak points, making "need-to-know" protocols unenforceable. |

Navigating this conflict requires the implementation of specific "Strategic Guardrails" to enforce boundaries within the AI deployment.

To secure a modern IT/AI Stack, architects must implement two fundamental rules. These rules function as a "kill switch" for the complexity described above, where Rule 1 acts as the sensor and Rule 2 as the actuator.

By adhering to these rules, the architect ensures that even a sophisticated AI stack remains auditable and destructible at any point in its lifecycle.

The proposed solution to the scale-complexity paradox is a strategic pivot from "General Purpose" systems to "Single-Purpose AI" deployed in contained environments. We must move away from the unmanaged web of communication toward selective involvement and dedicated workflows.
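
To illustrate why containment helps, the sketch below compares an open team with the same people split into isolated groups. It rests on a simplifying assumption that is ours, not the source's: people communicate only within their own contained group, with no cross-group paths. The names `pairwise_paths` and `contained_paths` are hypothetical helpers for this example.

```python
from typing import Sequence


def pairwise_paths(n: int) -> int:
    """Distinct pairwise paths among n people: n * (n - 1) / 2."""
    return n * (n - 1) // 2


def contained_paths(group_sizes: Sequence[int]) -> int:
    """Total paths when people only communicate inside their own group.

    Assumption for illustration: groups are fully isolated, so no path
    crosses a group boundary.
    """
    return sum(pairwise_paths(size) for size in group_sizes)


if __name__ == "__main__":
    open_team = pairwise_paths(12)             # everyone can reach everyone
    contained = contained_paths([3, 3, 3, 3])  # four isolated groups of 3
    print(f"open: {open_team} paths, contained: {contained} paths")
```

Under that assumption, twelve people in four isolated groups of three share 12 paths instead of 66. Real deployments will need some cross-group channels, so the actual reduction depends on how strictly the boundaries are enforced.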

Securing the future of high-speed information sharing rests on three pillars: